Google Cloud Platform (GCP)

Google Cloud consists of a set of physical assets, such as computers and hard disk drives, and virtual resources, such as virtual machines (VMs), that are contained in Google's data centers around the globe. Each data center location is in a region. Regions are available in Asia, Australia, Europe, North America, and South America. Google lists over 90 products under the Google Cloud brand. Some of the key services are listed below.

Google Compute Engine (GCE):

GCE is Google’s Infrastructure as a Service (IaaS) offering. With GCE you can create virtual machines and choose their CPU and memory, the kind of storage (for example, SSD or HDD), and the amount of storage. It’s almost like building your own computer or workstation and handling all the details of running it.

Compute Engine groups machine types into families. Each machine type family includes different machine types and is curated for specific workload types.

Compute Engine Types:

General-purpose: General-purpose machine types offer the best price-performance ratio for a variety of workloads.

- E2 machine types are cost-optimized VMs that offer up to 32 vCPUs with up to 128 GB of memory with a maximum of 8 GB per vCPU. E2 machines have a predefined CPU platform running either an Intel or the second generation AMD EPYC Rome processor.

- N2 machine types offer up to 80 vCPUs, 8 GB of memory per vCPU, and are available on the Intel Cascade Lake CPU platform.

- N2D machine types offer up to 224 vCPUs, 8 GB of memory per vCPU, and are available on second generation AMD EPYC Rome platforms.

- N1 machine types offer up to 96 vCPUs, 6.5 GB of memory per vCPU, and are available on Intel Sandy Bridge, Ivy Bridge, Haswell, Broadwell, and Skylake CPU platforms.
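The per-family limits in the list above can be turned into a quick sizing check. This is a minimal sketch: the `FAMILY_LIMITS` table and the `max_memory_gb` helper are hypothetical, hold only the figures quoted above, and ignore regional availability and the exact predefined shapes in the Compute Engine catalog.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class FamilyLimits:
    max_vcpus: int
    gb_per_vcpu: float                  # maximum memory per vCPU
    max_memory_gb: Optional[float] = None  # absolute cap, if the family has one


# Hypothetical table holding only the figures quoted in the list above.
FAMILY_LIMITS = {
    "e2": FamilyLimits(max_vcpus=32, gb_per_vcpu=8, max_memory_gb=128),
    "n2": FamilyLimits(max_vcpus=80, gb_per_vcpu=8),
    "n2d": FamilyLimits(max_vcpus=224, gb_per_vcpu=8),
    "n1": FamilyLimits(max_vcpus=96, gb_per_vcpu=6.5),
}


def max_memory_gb(family: str, vcpus: int) -> float:
    """Return the most memory a VM of this family and vCPU count can have."""
    limits = FAMILY_LIMITS[family.lower()]
    if not 1 <= vcpus <= limits.max_vcpus:
        raise ValueError(f"{family} supports 1 to {limits.max_vcpus} vCPUs, got {vcpus}")
    memory = vcpus * limits.gb_per_vcpu
    # E2 also caps total memory, so 32 vCPUs still top out at 128 GB.
    if limits.max_memory_gb is not None:
        memory = min(memory, limits.max_memory_gb)
    return memory


print(max_memory_gb("e2", 8))   # 64
print(max_memory_gb("n1", 4))   # 26.0
```

Keeping the limits in data rather than in branching code makes it easy to update the table when the catalog changes.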

Memory-optimized: Memory-optimized machine types are ideal for memory-intensive workloads because they offer more memory per core than other machine types, with up to 12 TB of memory.

Compute-optimized: Compute-optimized machine types offer the highest performance per core on Compute Engine and are optimized for compute-intensive workloads. They use Intel Xeon Scalable processors (Cascade Lake) with up to 3.8 GHz sustained all-core turbo.

Accelerator-optimized: Accelerator-optimized machine types are ideal for massively parallelized CUDA compute workloads, such as machine learning (ML) and high performance computing (HPC).

Images:

Use operating system images to create boot disks for your instances. You can use one of the following image types:

- Public images are provided and maintained by Google, open source communities, and third-party vendors. By default, all Google Cloud projects have access to these images and can use them to create instances.

- Custom images are available only to your Cloud project. You can create a custom image from boot disks and other images. Then, use the custom image to create an instance.

You can use most public images at no additional cost, but some premium images add cost to your instances. Custom images that you import to Compute Engine add no cost to your instances, but they incur an image storage charge while you keep them in your project.

Storage Options:

Compute Engine offers several types of storage options for your instances. Each of the following storage options has unique price and performance characteristics:

- Zonal persistent disk: Efficient, reliable block storage.

- Regional persistent disk: Regional block storage replicated in two zones.

- Local SSD: High performance, transient, local block storage.

- Cloud Storage buckets: Affordable object storage.

- Filestore: High performance file storage for Google Cloud users.

Disk Types:

When you configure a zonal or regional persistent disk, you must select one of the following disk types:

- Standard persistent disks (pd-standard) are backed by standard hard disk drives (HDD).

- Balanced persistent disks (pd-balanced) are backed by solid-state drives (SSD). They are an alternative to SSD persistent disks that balance performance and cost.

- SSD persistent disks (pd-ssd) are backed by solid-state drives (SSD).
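The disk type is chosen when the disk is created. A minimal sketch, assuming the gcloud CLI is installed and authenticated: the hypothetical `disk_create_command` helper only builds the argument list for `gcloud compute disks create`, and the disk name and zone are placeholders. Pass the list to `subprocess.run(cmd, check=True)` to actually run it.

```python
import shlex

# The three disk types listed above, by their API names.
DISK_TYPES = {"pd-standard", "pd-balanced", "pd-ssd"}


def disk_create_command(name: str, disk_type: str, size_gb: int, zone: str) -> list[str]:
    """Build (but do not run) a gcloud command that creates a zonal persistent disk."""
    if disk_type not in DISK_TYPES:
        raise ValueError(f"unknown disk type {disk_type!r}; expected one of {sorted(DISK_TYPES)}")
    if size_gb <= 0:
        raise ValueError("size_gb must be positive")
    return [
        "gcloud", "compute", "disks", "create", name,
        f"--type={disk_type}",
        f"--size={size_gb}GB",
        f"--zone={zone}",
    ]


cmd = disk_create_command("data-disk-1", "pd-balanced", 100, "us-central1-a")
print(shlex.join(cmd))
# gcloud compute disks create data-disk-1 --type=pd-balanced --size=100GB --zone=us-central1-a
```

Returning an argument list instead of a shell string avoids quoting problems when the command is later passed to `subprocess.run`.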

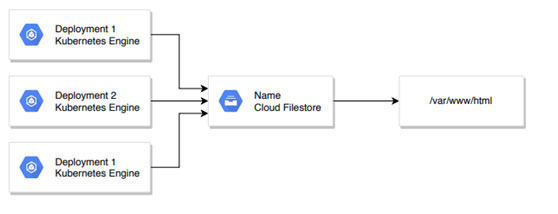

Filestore:

Filestore is a fully managed, NoOps file storage service. You can mount Filestore file shares on Compute Engine VMs, and because Filestore is tightly integrated with Google Kubernetes Engine, containers can reference the same shared data.

The High Scale tier is designed for high-performance workloads. If requirements change, you can grow or shrink instances through the Google Cloud Console, the gcloud command-line tool, or the API.

Filestore provides a consistent view of your file system data and steady performance over time, with speeds of up to 480K IOPS and 16 GB/s for the most demanding workloads.

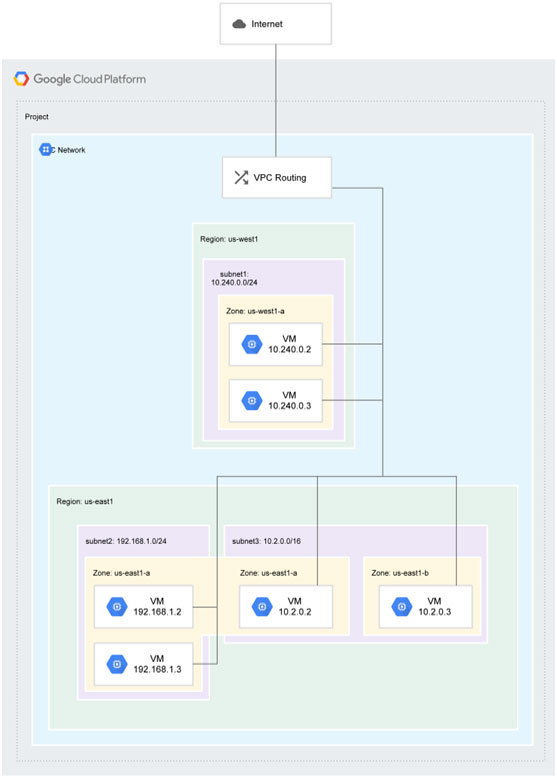

VPC (Virtual Private Cloud):

Virtual Private Cloud (VPC) provides networking functionality to Compute Engine virtual machine (VM) instances, Google Kubernetes Engine (GKE) clusters, and the App Engine flexible environment. VPC provides networking for your cloud-based resources and services that is global, scalable, and flexible.

VPC Networks: You can think of a VPC network the same way you'd think of a physical network, except that it is virtualized within Google Cloud. A VPC network is a global resource that consists of a list of regional virtual subnetworks (subnets) in data centers, all connected by a global wide area network. VPC networks are logically isolated from each other in Google Cloud.

A VPC network provides the following:

- Provides connectivity for your Compute Engine virtual machine (VM) instances, including Google Kubernetes Engine (GKE) clusters, App Engine flexible environment instances, and other Google Cloud products built on Compute Engine VMs.

- Offers built-in Internal TCP/UDP Load Balancing and proxy systems for Internal HTTP(S) Load Balancing.

- Connects to on-premises networks using Cloud VPN tunnels and Cloud Interconnect attachments.

- Distributes traffic from Google Cloud external load balancers to backends.

Firewall Rules: Each VPC network implements a distributed virtual firewall that you can configure. Firewall rules allow you to control which packets are allowed to travel to which destinations. Every VPC network has two implied firewall rules that block all incoming connections and allow all outgoing connections.
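The way the implied rules interact with your own rules can be modeled in a few lines. A simplified sketch: the `FirewallRule` type and `evaluate` function are hypothetical, and real rules also match on source and destination ranges, target tags, and service accounts, which are omitted here. It follows the documented ordering where a lower priority number wins, a deny beats an allow at equal priority, and the implied rules sit at the lowest priority, 65535.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class FirewallRule:
    direction: str               # "INGRESS" or "EGRESS"
    action: str                  # "allow" or "deny"
    priority: int                # 0 (highest) to 65535 (lowest)
    protocol: str                # e.g. "tcp", "udp", or "all"
    port: Optional[int] = None   # None matches every port


# The two implied rules, at the lowest possible priority.
IMPLIED_RULES = [
    FirewallRule("INGRESS", "deny", 65535, "all"),
    FirewallRule("EGRESS", "allow", 65535, "all"),
]


def matches(rule: FirewallRule, direction: str, protocol: str, port: int) -> bool:
    return (
        rule.direction == direction
        and rule.protocol in ("all", protocol)
        and (rule.port is None or rule.port == port)
    )


def evaluate(rules: list[FirewallRule], direction: str, protocol: str, port: int) -> str:
    """Return "allow" or "deny" using the highest-priority matching rule."""
    candidates = [r for r in rules + IMPLIED_RULES if matches(r, direction, protocol, port)]
    # Lower number wins; at equal priority, deny sorts before allow.
    best = min(candidates, key=lambda r: (r.priority, r.action != "deny"))
    return best.action


custom = [FirewallRule("INGRESS", "allow", 1000, "tcp", 22)]
print(evaluate(custom, "INGRESS", "tcp", 22))   # allow (custom rule)
print(evaluate(custom, "INGRESS", "tcp", 80))   # deny (implied ingress rule)
print(evaluate(custom, "EGRESS", "tcp", 443))   # allow (implied egress rule)
```

Because the implied rules always match, every packet gets a decision even when no custom rule applies.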

Routes: Routes tell VM instances and the VPC network how to send traffic from an instance to a destination, either inside the network or outside of Google Cloud. Each VPC network comes with some system-generated routes to route traffic among its subnets and send traffic from eligible instances to the internet. You can create custom static routes to direct some packets to specific destinations.
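Route selection can be sketched as a longest-prefix match, with priority breaking ties between equally specific routes. This is a simplified model: the `Route` type, `select_route` function, the VPN next hop, and the priority given to the subnet route are illustrative assumptions, and real subnet routes take precedence in ways this sketch only approximates.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    dest_range: str
    priority: int   # lower number is preferred when prefixes are equally specific
    next_hop: str


def select_route(routes: list[Route], destination: str) -> Route:
    ip = ipaddress.ip_address(destination)
    applicable = [r for r in routes if ip in ipaddress.ip_network(r.dest_range)]
    if not applicable:
        raise LookupError(f"no route to {destination}")
    # Most specific destination range wins; priority breaks ties.
    return min(
        applicable,
        key=lambda r: (-ipaddress.ip_network(r.dest_range).prefixlen, r.priority),
    )


routes = [
    Route("10.128.0.0/20", 0, "subnet (us-central1)"),        # like a system-generated subnet route
    Route("0.0.0.0/0", 1000, "default-internet-gateway"),    # like the system-generated default route
    Route("192.168.10.0/24", 800, "vpn-tunnel-to-office"),     # hypothetical custom static route
]
print(select_route(routes, "10.128.0.5").next_hop)    # subnet (us-central1)
print(select_route(routes, "8.8.8.8").next_hop)       # default-internet-gateway
print(select_route(routes, "192.168.10.7").next_hop)  # vpn-tunnel-to-office
```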

Forwarding Rules: While routes govern traffic leaving an instance, forwarding rules direct traffic to a Google Cloud resource in a VPC network based on IP address, protocol, and port. Some forwarding rules direct traffic from outside of Google Cloud to a destination in the network; others direct traffic from inside the network. Destinations for forwarding rules are target instances, load balancer targets (target proxies, target pools, and backend services), and Cloud VPN gateways.

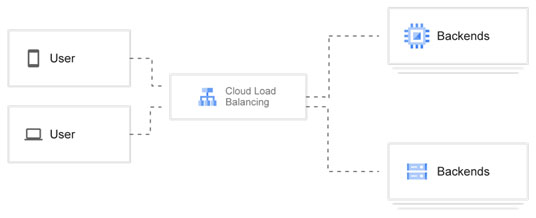

Cloud Load Balancing:

Google Cloud offers the following load balancing configurations to distribute traffic and workloads across many VMs:

- Global external load balancing, including HTTP(S) Load Balancing, SSL Proxy Load Balancing, and TCP Proxy Load Balancing.

- Regional external network load balancing.

- Regional internal load balancing.

Cloud Load Balancers:

Google Cloud load balancers can be divided into external and internal load balancers:

External load balancers distribute traffic coming from the internet to your Google Cloud Virtual Private Cloud (VPC) network. Global load balancing requires that you use the Premium Tier of Network Service Tiers. For regional load balancing, you can use Standard Tier.

Internal load balancers distribute traffic to instances inside of Google Cloud.

Traffic Type:

The type of traffic that you need your load balancer to handle is another factor in determining which load balancer to use:

For HTTP and HTTPS traffic, use:

- External HTTP(S) Load Balancing

- Internal HTTP(S) Load Balancing

For TCP traffic, use:

- TCP Proxy Load Balancing

- Network Load Balancing

- Internal TCP/UDP Load Balancing

For UDP traffic, use:

- Network Load Balancing

- Internal TCP/UDP Load Balancing
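The traffic-type lists above can be read as a small lookup table, filtered by whether the clients are on the internet or inside Google Cloud. A minimal sketch: `OPTIONS_BY_TRAFFIC` and `candidate_load_balancers` are hypothetical names, and the table contains only the options listed above.

```python
# Options per traffic type, copied from the lists above.
HTTP_OPTIONS = ["External HTTP(S) Load Balancing", "Internal HTTP(S) Load Balancing"]

OPTIONS_BY_TRAFFIC = {
    "http": HTTP_OPTIONS,
    "https": HTTP_OPTIONS,
    "tcp": ["TCP Proxy Load Balancing", "Network Load Balancing", "Internal TCP/UDP Load Balancing"],
    "udp": ["Network Load Balancing", "Internal TCP/UDP Load Balancing"],
}


def candidate_load_balancers(traffic: str, internal: bool) -> list[str]:
    """Filter the options for a traffic type by where the clients are."""
    options = OPTIONS_BY_TRAFFIC[traffic.lower()]
    # In this naming scheme, only the internal products start with "Internal".
    return [name for name in options if name.startswith("Internal") == internal]


print(candidate_load_balancers("tcp", internal=False))
print(candidate_load_balancers("udp", internal=True))
```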

Cross-Region Load Balancing:

When you configure an external HTTP(S) load balancer in Premium Tier, it uses a global external IP address and can intelligently route requests from users to the closest backend instance group or NEG, based on proximity. For example, if you set up instance groups in North America, Europe, and Asia, and attach them to a load balancer's backend service, user requests around the world are automatically sent to the VMs closest to the users, assuming the VMs pass health checks and have enough capacity (defined by the balancing mode). If the closest VMs are all unhealthy, or if the closest instance group is at capacity and another instance group is not at capacity, the load balancer automatically sends requests to the next closest region with capacity.
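The selection behavior described above can be approximated as "closest healthy group with spare capacity, otherwise the next closest". A simplified sketch: the `BackendGroup` fields (including `distance_ms` as a stand-in for proximity) and `pick_backend` are hypothetical, and the real Google Front End logic also spreads overflow traffic gradually rather than switching all at once.

```python
from dataclasses import dataclass


@dataclass
class BackendGroup:
    region: str
    distance_ms: float   # hypothetical proximity measure from the user's edge location
    healthy: bool        # did the VMs pass health checks?
    in_flight: int       # current load
    capacity: int        # limit implied by the balancing mode


def pick_backend(groups: list[BackendGroup]) -> BackendGroup:
    """Return the closest group that is healthy and below capacity."""
    for group in sorted(groups, key=lambda g: g.distance_ms):
        if group.healthy and group.in_flight < group.capacity:
            return group
    raise RuntimeError("no healthy backend group has spare capacity")


groups = [
    BackendGroup("us-central1", 20, healthy=True, in_flight=100, capacity=100),  # full
    BackendGroup("europe-west1", 90, healthy=True, in_flight=10, capacity=100),
    BackendGroup("asia-east1", 160, healthy=True, in_flight=0, capacity=100),
]
print(pick_backend(groups).region)  # europe-west1 (the closest group is at capacity)
```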

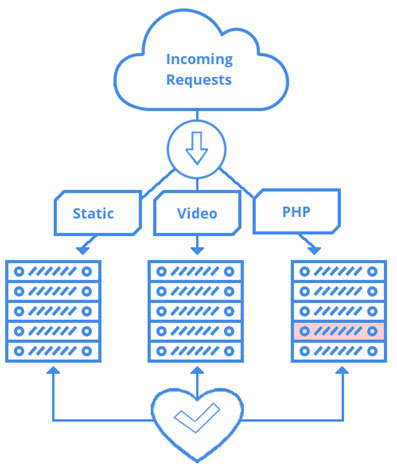

Content-Based Load Balancing:

HTTP(S) Load Balancing supports content-based load balancing using URL maps to select a backend service based on the requested host name, request path, or both. For example, you can use a set of instance groups or NEGs to handle your video content and another set to handle everything else. You can also use HTTP(S) Load Balancing with Cloud Storage buckets. After you have your load balancer set up, you can add Cloud Storage buckets to it.
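A URL map can be pictured as host rules that lead to path rules, with a default service as the fallback. This is a simplified model rather than the URL map API: the `UrlMap` class and the service and bucket names are hypothetical, and it assumes the most specific (longest) matching path prefix wins.

```python
from dataclasses import dataclass, field


@dataclass
class UrlMap:
    default_service: str
    # host -> {path prefix -> backend service or bucket}
    host_rules: dict[str, dict[str, str]] = field(default_factory=dict)

    def route(self, host: str, path: str) -> str:
        path_rules = self.host_rules.get(host, {})
        matching = [prefix for prefix in path_rules if path.startswith(prefix)]
        if not matching:
            return self.default_service
        # Longest matching prefix wins, so "/video/live/" beats "/video/".
        return path_rules[max(matching, key=len)]


url_map = UrlMap(
    default_service="web-backend-service",
    host_rules={
        "example.com": {
            "/video/": "video-backend-service",
            "/video/live/": "live-backend-service",
            "/static/": "static-backend-bucket",
        },
    },
)
print(url_map.route("example.com", "/video/intro.mp4"))  # video-backend-service
print(url_map.route("example.com", "/index.html"))       # web-backend-service
```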

Instance Group:

An instance group is a collection of virtual machine (VM) instances that you can manage as a single entity.

Compute Engine offers two kinds of VM instance groups, managed and unmanaged:

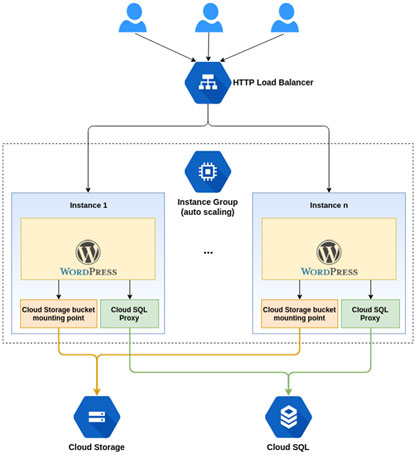

- Managed instance groups (MIGs) let you operate apps on multiple identical VMs. You can make your workloads scalable and highly available by taking advantage of automated MIG services, including: autoscaling, autohealing, regional (multiple zone) deployment, and automatic updating.

- Unmanaged instance groups let you load balance across a fleet of VMs that you manage yourself.

Autoscaling Groups of Instances:

Managed instance groups (MIGs) offer autoscaling capabilities that let you automatically add or delete virtual machine (VM) instances from a MIG based on increases or decreases in load. Autoscaling helps your apps gracefully handle increases in traffic and reduce costs when the need for resources is lower. You define the autoscaling policy and the autoscaler performs automatic scaling based on the measured load.

Autoscaling works by adding more VMs to your MIG when there is more load (scaling out, sometimes referred to as scaling up), and deleting VMs when the need for VMs is lowered (scaling in or down).
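For a CPU-utilization policy, scaling out and in roughly amounts to sizing the group so that average utilization lands near the target. A simplified sketch: `recommended_size` and its default bounds are hypothetical, and the real autoscaler also applies the cool-down period, the stabilization period, and any other configured signals.

```python
import math


def recommended_size(current_size: int, avg_cpu_percent: float, target_cpu_percent: float,
                     min_size: int = 1, max_size: int = 10) -> int:
    """Estimate how many VMs keep average CPU utilization near the target."""
    if not 0 < target_cpu_percent <= 100:
        raise ValueError("target_cpu_percent must be in (0, 100]")
    # Total load divided by the load each VM should carry at the target.
    needed = math.ceil(current_size * avg_cpu_percent / target_cpu_percent)
    # Clamp to the group's configured minimum and maximum size.
    return max(min_size, min(max_size, needed))


print(recommended_size(4, 90.0, 60.0))   # 6: scale out
print(recommended_size(10, 20.0, 60.0))  # 4: scale in
```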

Fundamentals of Autoscaling:

Autoscaling uses the following fundamental concepts and services.

Managed instance groups: Autoscaling is a feature of managed instance groups (MIGs). A managed instance group is a collection of virtual machine (VM) instances that is created from a common instance template. An autoscaler adds or deletes instances from a managed instance group.

Autoscaling policies: To create an autoscaler, specify one or more autoscaling policies. Autoscaling policies configure which inputs the autoscaler uses to determine how to scale your instance group.

Cool-down period: The cool-down period is how long the autoscaler waits after a new instance starts before it uses that instance's usage data. While an instance is initializing, its usage might not reflect normal circumstances, so that information might not be reliable for autoscaler decisions and is better omitted.

Stabilization period: For the purposes of scaling in, the autoscaler calculates the group's recommended target size based on peak load over the last 10 minutes. These last 10 minutes are referred to as the stabilization period. Using the stabilization period, the autoscaler ensures that the recommended size for your managed instance group is always sufficient to serve the peak load observed during the previous 10 minutes.

Autoscaling mode: If you need to investigate or configure your group without interference from autoscaler operations, you can temporarily turn off or restrict autoscaling activities. The autoscaler's configuration persists while it is turned off or restricted, and all autoscaling activities resume when you turn it on again or lift the restriction.

Scale-in controls: If your workloads take many minutes to initialize (for example, due to lengthy installation tasks), you can reduce the risk of response latency caused by abrupt scale-in events by configuring scale-in controls. Specifically, if you expect load spikes to follow soon after declines, you can limit the scale-in rate to prevent autoscaling from reducing a MIG's size by more VM instances than your workload can tolerate.
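The stabilization period and scale-in controls both slow down shrinking while leaving growth alone. A simplified sketch: the `ScaleInGuard` class is hypothetical, assumes one size recommendation per step (for example, per minute), and limits scale-in per step rather than over a trailing time window as the real scale-in control does.

```python
from collections import deque


class ScaleInGuard:
    """Hypothetical helper combining a stabilization window and a scale-in limit."""

    def __init__(self, window: int = 10, max_scale_in: int = 2):
        # With one recommendation per minute, window=10 covers the last 10 minutes.
        self.recent = deque(maxlen=window)
        self.max_scale_in = max_scale_in

    def next_size(self, current_size: int, recommendation: int) -> int:
        self.recent.append(recommendation)
        # Stabilization: never go below the peak recommendation in the window.
        target = max(self.recent)
        if target >= current_size:
            return target  # scaling out is not limited here
        # Scale-in control: remove at most max_scale_in VMs per step.
        return max(target, current_size - self.max_scale_in)


guard = ScaleInGuard(window=3, max_scale_in=2)
size = 10
history = []
for rec in [10, 4, 4, 4, 4, 4]:
    size = guard.next_size(size, rec)
    history.append(size)
print(history)  # [10, 10, 10, 8, 6, 4]
```

The group holds at 10 until the peak leaves the window, then shrinks by at most two VMs per step.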

GCP Serverless Computing:

Google Cloud serverless computing takes server and load management off your hands: code or functions run on demand in response to requests or events, and the platform scales automatically, running additional instances to serve parallel requests. We have covered serverless computing at great length.

GCP Serverless Computing Provides:

- No servers to manage

- Fully managed security

- Pay only for what you use

- A flexible environment for developers

- An easy-to-understand interface

- A range of full-stack serverless options

- Everything you need to build an end-to-end serverless application

- The freedom to focus entirely on your code while GCP takes care of everything else

- Fast deployment, so you can go global as soon as your system is configured

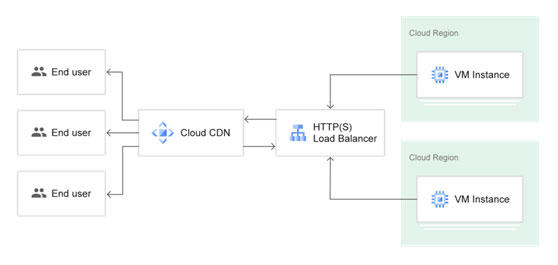

Cloud CDN (Content Delivery Network):

Cloud CDN (Content Delivery Network) uses Google's global edge network to serve content closer to users, which accelerates your websites and applications. Cloud CDN works with external HTTP(S) Load Balancing to deliver content to your users. The external HTTP(S) load balancer provides the frontend IP addresses and ports that receive requests and the backends that respond to the requests.

When a user requests content from an external HTTP(S) load balancer, the request arrives at a Google Front End (GFE), which is at the edge of Google's network as close as possible to the user.

If the load balancer's URL map routes traffic to a backend service or backend bucket that has Cloud CDN configured, the GFE uses Cloud CDN.

Cloud DNS:

Cloud DNS lets you publish your zones and records in DNS without the burden of managing your own DNS servers and software.

Cloud DNS offers both public zones and private managed DNS zones. A public zone is visible to the public internet, while a private zone is visible only from one or more Virtual Private Cloud (VPC) networks that you specify.

DNS Forwarding Methods:

Google Cloud offers inbound and outbound DNS forwarding for private zones. You can configure DNS forwarding by creating a forwarding zone or a Cloud DNS server policy. The two methods are summarized below:

Inbound: Create an inbound server policy to enable an on-premises DNS client or server to send DNS requests to Cloud DNS. The DNS client or server can then resolve records according to a VPC network's name resolution order.

On-premises clients can resolve records in private zones, forwarding zones, and peering zones for which the VPC network has been authorized. On-premises clients use Cloud VPN or Cloud Interconnect to connect to the VPC network.

Outbound: You can configure VMs in a VPC network to do the following:

- Send DNS requests to DNS name servers of your choosing. The name servers can be located in the same VPC network, in an on-premises network, or on the internet.

- Resolve records hosted on name servers configured as forwarding targets of a forwarding zone authorized for use by your VPC network.

- Create an outbound server policy for the VPC network to send all DNS requests to an alternative name server. When using an alternative name server, VMs in your VPC network are no longer able to resolve records in Cloud DNS private zones, forwarding zones, or peering zones.
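The effect of an outbound server policy on a VM's lookups can be sketched as a simplified resolution order. This is an approximation of the VPC name resolution order, not its exact specification: the `resolver_for` function is hypothetical, and the zone names and the addresses 10.0.0.53 and 192.0.2.10 are placeholders.

```python
from typing import Optional


def resolver_for(name: str, alt_name_server: Optional[str], zones: dict[str, str]) -> str:
    """Return which resolver a VM's query would go to (simplified).

    zones maps a DNS suffix such as "corp.example.com." to a description of the
    private, forwarding, or peering zone authorized for the VPC network.
    """
    # An alternative name server takes every query, so the zones are never consulted.
    if alt_name_server is not None:
        return f"alternative name server {alt_name_server}"
    fqdn = name if name.endswith(".") else name + "."
    matching = [s for s in zones if fqdn == s or fqdn.endswith("." + s)]
    if matching:
        # The most specific (longest) zone name wins.
        return zones[max(matching, key=len)]
    if fqdn.endswith(".internal."):
        return "Compute Engine internal DNS"
    return "public DNS"


zones = {
    "corp.example.com.": "forwarding zone -> 10.0.0.53",
    "db.corp.example.com.": "private zone",
}
print(resolver_for("app.corp.example.com", None, zones))          # forwarding zone -> 10.0.0.53
print(resolver_for("db.corp.example.com", "192.0.2.10", zones))   # alternative name server 192.0.2.10
```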

GCP Databases:

GCP offers the following database management tools:

Bare Metal Solution:

There are many workloads that are easy to lift and shift to the cloud, but there are also specialized workloads that are difficult to migrate to a cloud environment. These specialized workloads often require certified hardware and complicated licensing and support agreements. This solution provides a path to modernize your application infrastructure landscape while maintaining your existing investments and architecture. With Bare Metal Solution, you can bring your specialized workloads to Google Cloud and access and integrate with GCP services with minimal latency.

Cloud SQL:

Cloud SQL automatically ensures your databases are reliable, secure, and scalable so that your business continues to run without disruption. Cloud SQL automates backups, replication, encryption, patches, and capacity increases while ensuring greater than 99.95% availability, anywhere in the world. You can access Cloud SQL instances from just about any application: connect from App Engine, Compute Engine, Google Kubernetes Engine, or your workstation, and use BigQuery to query your Cloud SQL databases directly.

Cloud Spanner:

Cloud Spanner is built on Google’s dedicated network and battle tested by Google services used by billions. It offers up to 99.999% availability with zero downtime for planned maintenance and schema changes.
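The gap between the availability figures quoted for Cloud SQL (99.95%) and Cloud Spanner (99.999%) is easier to see as downtime per month. A small worked calculation, assuming a 30-day month; actual SLA terms define how downtime is measured, so treat this as arithmetic only. It works out to about 21.6 minutes per month for 99.95% and about 26 seconds per month for 99.999%.

```python
MINUTES_PER_30_DAY_MONTH = 30 * 24 * 60  # 43,200


def allowed_downtime_minutes(availability_percent: float) -> float:
    """Minutes of downtime per 30-day month that an availability figure still permits."""
    return (100 - availability_percent) / 100 * MINUTES_PER_30_DAY_MONTH


for label, pct in [("Cloud SQL", 99.95), ("Cloud Spanner", 99.999)]:
    print(f"{label}: {pct}% -> {allowed_downtime_minutes(pct):.2f} min/month")
```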

Cloud Bigtable:

A fully managed, scalable NoSQL database service for large analytical and operational workloads. Bigtable is ideal for storing very large amounts of data in a key-value store and supports high read and write throughput at low latency for fast access to large amounts of data. Throughput scales linearly: you can increase QPS (queries per second) by adding Bigtable nodes. Bigtable is built with proven infrastructure that powers Google products used by billions such as Search and Maps.
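Linear scaling makes capacity planning a division. A minimal sketch: `nodes_needed` is hypothetical, and the per-node throughput of 10,000 QPS used below is a placeholder assumption, not a published figure; measure your own workload to get a realistic value.

```python
def nodes_needed(target_qps: int, qps_per_node: int, min_nodes: int = 1) -> int:
    """Nodes required if throughput scales linearly with node count."""
    if qps_per_node <= 0:
        raise ValueError("qps_per_node must be positive")
    # Integer ceiling division avoids floating-point rounding surprises.
    return max(min_nodes, -(-target_qps // qps_per_node))


# Placeholder per-node throughput; replace with a measured value.
print(nodes_needed(45_000, qps_per_node=10_000))  # 5
```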

Firestore:

Firestore is a cloud-native, fully managed, scalable, and serverless NoSQL document database for developing rich mobile, web, and IoT applications. It scales up or down to meet demand, with no maintenance windows or downtime, so you can focus on application development.

Firebase Realtime Database:

The Firebase Realtime Database is a cloud-hosted NoSQL database that lets you store and sync data between your users in realtime. Realtime syncing makes it easy for your users to access their data from any device, web or mobile, and it helps your users collaborate with one another.

Memorystore:

Reduce latency with a scalable, secure, and highly available in-memory service for Redis and Memcached. Choose from the two most popular open source caching engines to build your applications. Memorystore supports both Redis and Memcached and is fully protocol compatible, so you can choose the engine that fits your cost and availability requirements. Memorystore is protected from the internet using VPC networks and private IP and comes with IAM integration, all designed to protect your data. Systems are monitored 24/7/365, ensuring your applications and data are protected.

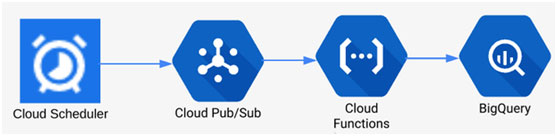

BigQuery:

Serverless, highly scalable, and cost-effective multi-cloud data warehouse designed for business agility. Rely on BigQuery’s robust security, governance, and reliability controls that offer high availability and a 99.99% uptime SLA. Protect your data with encryption by default and customer-managed encryption keys.

Cloud Storage:

Cloud Storage is a service for storing your objects in Google Cloud. An object is an immutable piece of data consisting of a file of any format. You store objects in containers called buckets. All buckets are associated with a project, and you can group your projects under an organization.

After you create a project, you can create Cloud Storage buckets, upload objects to your buckets, and download objects from your buckets. You can also grant permissions to make your data accessible to members you specify or, for certain use cases such as hosting a website, accessible to everyone on the public internet.

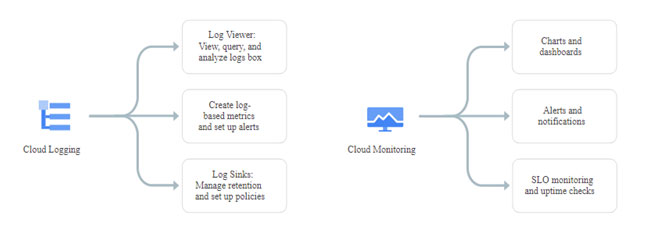

Google Cloud Operations Suite:

Monitor, troubleshoot, and improve application performance on your Google Cloud environment.

Cloud Monitoring provides visibility into the performance, uptime, and overall health of cloud-powered applications. Collect metrics, events, and metadata from Google Cloud services, hosted uptime probes, application instrumentation, and a variety of common application components; visualize them on charts and dashboards; and manage alerts.

IAM (Identity and Access Management):

Identity and Access Management (IAM) lets you create and manage permissions for Google Cloud resources. IAM unifies access control for Google Cloud services into a single system and presents a consistent set of operations.

IAM lets administrators authorize who can take action on specific resources, giving you full control and visibility to manage Google Cloud resources centrally. For enterprises with complex organizational structures, hundreds of workgroups, and many projects, IAM provides a unified view into security policy across your entire organization, with built-in auditing to ease compliance processes.

IAM provides tools to manage resource permissions with minimum fuss and high automation. You map job functions within your company to groups and roles, so users get access only to what they need to get the job done, and admins can easily grant default permissions to entire groups of users.
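The core IAM model binds roles, which are sets of permissions, to members such as users and groups. The sketch below checks a permission against such a policy. It is a simplified model: `ROLE_PERMISSIONS` lists only some of the permissions these predefined roles grant, the members are placeholders, `is_allowed` is hypothetical, and real IAM also applies inheritance through the resource hierarchy, conditions, and deny policies.

```python
# Excerpt of two predefined roles and some of the permissions they grant.
ROLE_PERMISSIONS = {
    "roles/storage.objectViewer": {"storage.objects.get", "storage.objects.list"},
    "roles/storage.objectAdmin": {
        "storage.objects.create", "storage.objects.delete",
        "storage.objects.get", "storage.objects.list", "storage.objects.update",
    },
}

# A policy binds roles to members (users, groups, service accounts).
policy = {
    "bindings": [
        {"role": "roles/storage.objectViewer", "members": ["group:analysts@example.com"]},
        {"role": "roles/storage.objectAdmin", "members": ["user:alice@example.com"]},
    ]
}


def is_allowed(policy: dict, identities: set[str], permission: str) -> bool:
    """True if any binding grants the permission to one of the caller's identities."""
    for binding in policy["bindings"]:
        if identities & set(binding["members"]):
            if permission in ROLE_PERMISSIONS.get(binding["role"], set()):
                return True
    return False


# Bob gets access through his group membership, not an individual grant.
bob = {"user:bob@example.com", "group:analysts@example.com"}
print(is_allowed(policy, bob, "storage.objects.get"))     # True
print(is_allowed(policy, bob, "storage.objects.delete"))  # False
```

Granting roles to groups rather than individual users keeps the policy small as teams change.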